Note: This post is third in a series where I share what I’ve learned starting and producing the Recompiler podcast. If you haven’t already, start with the introduction. This post follows Step 1: Identify a Topic, Point of View, and Structure.

Step 2: Gather your recording equipment: Computer, microphone, audio interface, headphones for monitoring.

There are numerous ways to record and produce podcasts. Not unlike photography, you can put together a digital recording rig for very little or you can spend thousands or tens of thousands of dollars on expensive, high-end gear. I recommend that for your first podcast endeavor, you get the best quality gear you can comfortably afford. If you end up doing a lot of podcasting, and find a way to fund it, you’ll surely want to upgrade your equipment. And by then, you’ll have more experience to guide you.

Below I give an overview of what you’ll need and explain what I picked for the Recompiler. For a more detailed guide, check out Transom’s excellent Podcasting Basics, Part 1: Voice Recording Gear.

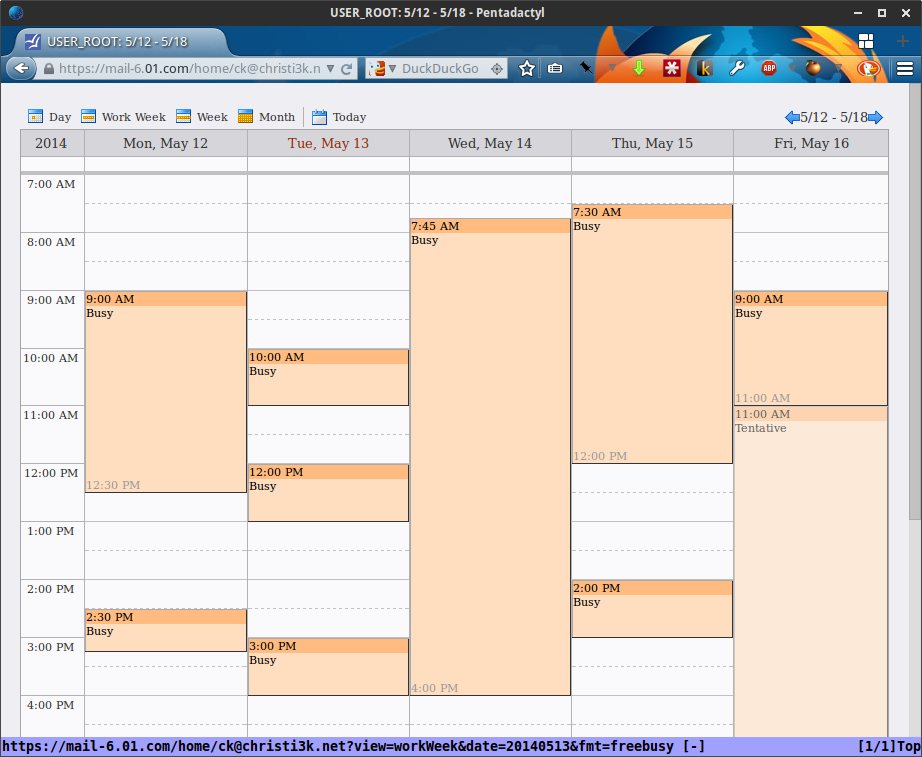

Computer or portable recorder?

First, you’ll need to decide how you’ll be recording your audio: via a computer or a portable recorder. If you’ll mostly be doing field interviews or otherwise traveling a lot, a portable recorder might make sense. The downside is that you’ll still need a way to edit and publish your podcast, and that requires a computer. For the Recompiler, I first thought I’d be doing a lot of field recording, so I picked up a Sony PCM-M10 ($200 at the time). While I use it for other things, I haven’t ended up using it much for the podcast. Instead, I record at my desk directly into my refurbished Mac mini. The good news is that you don’t need a high-end machine to record and edit podcast audio. There’s a good chance that a computer you already have available to you will be sufficient. Audio recording and editing software is also available for Windows, macOS, and Linux.

Microphone and audio interface

Podcasting is an audio medium, so you’ll need a way to record audio. Almost all modern computers have microphones built in. You can certainly start with whatever you have available to you. If you can’t afford to buy anything new, and you are ready to get started, don’t let the lack of an upgraded microphone stop you. A smartphone is another good option for getting started, especially if you have an iPhone. Most portable digital audio recorders have microphones built in as well.

However, if you do have a couple hundred bucks to spend, I recommend getting a better external microphone along with an audio interface.

External microphones generally connect via USB or XLR. Some have both. If the microphone has USB, you connect it directly to your computer with a USB cable like you would an external hard drive or non-wifi printer. If the microphone has XLR, you need an audio interface between the microphone and the computer. The microphone connects to the audio interface via an XLR cable, and the audio interface connects to the computer with a USB cable. The XLR setup is overall more complicated and more expensive, but generally provides better quality.

There are several USB microphones aimed at first-time podcasters. When I recorded In Beta, I used a refurbished Blue Yeti. I did not get the best of results. 5×5 nearly always complained about my audio quality. And, in general, I’ve had trouble with USB-based microphones, where I often have a ground-loop hum, which everyone but me can hear. As with all things, YMMV. Some folk swear by the Yeti, and other USB products from Blue. Rode also makes a USB microphone, but it’s more expensive than Blue’s offerings.

Having given up on USB microphones by the time we were planning the Recompiler, I looked for an affordable XLR solution. I settled on the Electro-Voice RE50N/D-B, a hand-held high-dynamic microphone with the Focusrite Scarlett 2i2 audio interface. My choice of microphone was based on: price (was in my budget), ability to use it in the field as well as in the “studio”, and that it would work with my chosen audio interface without extra equipment. I don’t recall how I settled on the Focusrite. I think it was a combination of a recommendation via Twitter, price, and brand (Focusrite seemed well-known and dependable). I’m happy with both choices. The Scarlett 2i2 worked right away without fuss and I get decent sound from the RE50N/D-B in a variety of environments.

If you’re just getting started, I definitely recommend the Focusrite Scarlett 2i2 ($150 new) if you want to be able to record a guest or other audio source in studio, or the Scarlett Solo ($100 new) if you just need to record from one audio source. Look on eBay for used equipment to save money.

As for the microphone, there are too many options and preferences for me to feel comfortable giving a specific recommendation. If you’re just starting out, I recommend reading through reviews on transom.org and then getting the best microphone you can comfortably afford, knowing that it won’t be the last mic you buy if you stick with podcasting.

Other accessories

Unless you’re doing field interviews exclusively, you’ll need to get something to hold your microphone. This can be a tabletop or floor stand, or a desk-mounted arm. You might also want to include a pop filter and/or a shock mount. The Transom article I mentioned earlier gives a good overview of options for these.

For the Recompiler, I use the RODE PSA1 ($100) as a microphone mount and the simple foam microphone cover that came with the RE50N/D-B. I haven’t needed a shock mount because, I think, the RE50N/D-B is designed as a hand-held mic and doesn’t pick up a lot of vibration. I’m also careful not to bump it, the mic boom, or my desk while I’m recording.

Headphones

Don’t forget to get and use a decent pair of headphones while you’re recording and editing your podcast audio.

For the Recompiler, I picked up a pair of Sennheiser HD 202 II ($25) which are dedicated to audio recording and editing. In fact, they never leave my desk. That way I’m never scrambling to find them when it’s time to work. The Sennheisers I have aren’t amazingly awesome, but they were inexpensive and get the job done.

Whatever you pick, aim for over-the-ear headphones that are designed for studio monitoring, do not have active noise cancellation, and do not have a built-in mic. If you do end up using headphones with a built-in mic, double-check that you are not recording audio from that mic. There’s nothing more disappointing than recording a whole segment or show only to realize you used your crappiest microphone.

If you have it in your budget, you might consider the Sony MDRV6 ($99).
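
If you want a rough sense of what the pieces add up to, here is a minimal Python sketch that totals the prices quoted in this post. The two groupings and the names `PRICES` and `rig_total` are made up for illustration, the microphone is left out because I haven’t given a price for one, and current prices may differ from the ones I paid.

```python
# Prices as quoted in this post (USD). The microphone is omitted
# because the post does not state a price for it.
PRICES = {
    "Focusrite Scarlett 2i2": 150,
    "Focusrite Scarlett Solo": 100,
    "RODE PSA1": 100,
    "Sennheiser HD 202 II": 25,
    "Sony MDRV6": 99,
}


def rig_total(items, prices=PRICES):
    """Sum the quoted prices for the named items."""
    return sum(prices[name] for name in items)


# Hypothetical groupings, for illustration only.
solo_starter = ["Focusrite Scarlett Solo", "Sennheiser HD 202 II"]
two_source = ["Focusrite Scarlett 2i2", "RODE PSA1", "Sony MDRV6"]

print(rig_total(solo_starter))  # 125
print(rig_total(two_source))    # 349
```

Add whatever microphone you settle on to either list (and its price to `PRICES`) to see where your total lands before you buy.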

Questions or comments?

Please get in touch or leave a comment below if you have questions, comments, or just want encouragement!

Next post…

Stay tuned for the next post in this series!